Diary Of A Breach

It's 10:00. Do you know where your data is? Before you answer, take a look at our intrusion timeline.

Our experience shows that attackers are more successful than most people like to believe--breaches that make the news are just the tip of the iceberg. Most go unreported, and few IT organizations have the know-how to cope with successful incursions. There are so many moving parts that incident response teams regularly make mistakes, and one miscommunication can cost thousands of dollars.

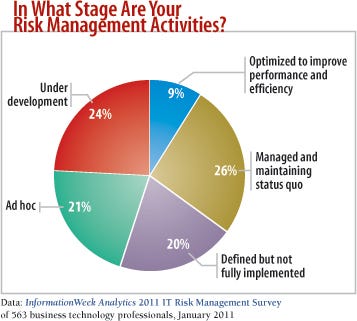

Juggling lots of moving parts can be a less dicey process with a comprehensive risk management framework, but few companies have such frameworks in place. Only 35% of the 563 respondents to our January 2011 InformationWeek Analytics IT Risk Management Survey say their programs holistically cover all the key risks related to IT, including operational, financial, and business. Just 9% characterize their programs as optimized. To show what can happen when various groups are working without an overarching security structure, we've created a breach diary. The company and details of the attack are fictional, but the mistakes are taken from actual situations. We'll lay out the scenario, and then dissect what went wrong and discuss the lessons learned.

Thursday

6:00 p.m. Everything seems normal. Meetings dragging on, expense reports submitted, clock ticking slowly as ever. Everyone planning for the upcoming weekend.

Friday

8:00 a.m. Network operations center (NOC) notices that a database server registered higher-than-normal resource utilization overnight. It appears to be a spike. As the support team begins to discuss it, alerts come in for a network outage affecting the corporate office. The team pivots to respond. It resolves the issue, but in all the excitement, the database anomaly is forgotten.

10:00 a.m. Security team meets to review open items from the current week and set priorities for the upcoming week. The team reviews development defects, numbers of incidents, projects that need attention, and the ludicrously insecure requests made by other departments that were summarily denied. Everyone looking forward to the weekend. No one mentions that database server.

4:00 p.m. Reports submitted, emails answered, alerts investigated, and the day is more or less over. The team works the next hour to tie up loose ends, decide what tasks can wait for Monday, and solve the most pressing issue: where to go for happy hour. Life is good.

Saturday

2:00 a.m. NOC is paged to respond to another instance of high resource utilization on a database server. The database is queuing requests, production applications are timing out, and alerts are being triggered. As NOC staffers begin looking into the problem, they see that an Oracle process is consuming the majority of system resources and memory. They have no insight into why or how this is happening, so they escalate the issue to the database administrator (DBA) on call.

2:30 a.m. DBA responds by email to the NOC. He instructs the NOC to restart Oracle, and if that doesn't solve the problem, restart the server. Says the team can call him again if these steps don't solve the problem.

3:30 a.m. NOC restarts Oracle and, just for good measure, the database server, to avoid having to call the DBA again. Team closes the alert, noting it was able to resolve the problem without re-escalating to the DBA. That will look good in the CIO's metrics, which count the number of times issues are escalated from the NOC to on-call admins. The team feels proud.

5:00 a.m. NOC begins receiving alerts for high resource utilization on the same database server the team thought it had fixed. The NOC determines that the DBA must be paged again, since this is a repeat issue.

6:30 a.m. DBA has logged in and removed the database server from the production pool. The redundant configuration will allow this server to sit quietly until Monday morning, when he can take a look at it without an impact on operations. The DBA demonstrates his abilities as the world's greatest father and husband, making breakfast for the family before taking the kids to the mall. Son gets a new video game, daughter wanders from store to store, texting her friends and wondering why she must spend all day with her family instead of doing whatever teenage girls do.

Monday

10:00 a.m. DBA begins researching the database issue and realizes the root cause was a large number of requests, both valid and erroneous, in a short period of time. He recognizes that the requests came from both a Web application and local accounts. Initially he believes the traffic may be legitimate, but soon realizes the queries have no business use. No one should have been performing such actions. The data being retrieved is highly sensitive--in fact, if the combined data is stolen, it could be catastrophic to the company. The DBA sees the hard reality: His database has been compromised.

10:15 a.m. DBA meets with the security team. Frantically, he explains the events of the weekend. Security counters with questions as to why he didn't respond to the alerts more proactively. Security team orders the database server disconnected from the network immediately.

10:30 a.m. Security team leaps into action. It assembles all the people needed to move the investigation along: full security staff, the DBA, system administrator, NOC staff, and CIO. While the response team is assembled, a member of the security team begins gathering information, including the queries performed and other data that may help in later phases of the investigation.

11:00 a.m. Everyone's been pulled out of meetings and assembled in a conference room. The incident response team is briefed on what's known thus far. CIO decides to contact the head of the legal department and the COO, as a precaution. COO asks to be informed of the team's progress every 30 minutes. As if the team doesn't have enough to do, now it must train and debrief the COO--in real time--on both the compromise and the technology involved. Where was this guy when the security team was asking for additional money for protective technologies?

11:30 a.m. Response team is working to piece together the information it has. The trail begins when the first alert of performance issues was received by the monitoring system; this is the only clue that might indicate when the attack began. Next, the team searches through network- and host-based intrusion detection, file integrity monitoring, antimalware, Web access, and Web application firewall logs. It attempts to correlate the log data, looking for anything that came in to the Web servers and then went on to the database server. It also looks for data that may have been sent out from the database or Web servers. Log analysis involves processing a lot of data and requires several people with specialized knowledge of applications and databases.
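The correlation step can be sketched in a few lines. This is a minimal illustration, not the team's actual tooling: the `WebRequest` and `DbQuery` record types, the host names, and the two-second window are all hypothetical, and real logs would first need parsing and synchronized clocks. The sketch pairs each database query issued from a Web server with the Web requests that arrived shortly before it.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class WebRequest:
    # Hypothetical, already-parsed Web server access-log entry.
    time: datetime
    client_ip: str
    path: str


@dataclass
class DbQuery:
    # Hypothetical, already-parsed database audit-log entry.
    time: datetime
    source_host: str
    sql: str


def correlate(web_log, db_log, web_hosts, window=timedelta(seconds=2)):
    """Pair each DB query that originated on a Web server with every Web
    request received within `window` before that query."""
    pairs = []
    for query in db_log:
        if query.source_host not in web_hosts:
            continue  # queries from local accounts need a separate review
        for request in web_log:
            if query.time - window <= request.time <= query.time:
                pairs.append((request, query))
    return pairs
```

The nested loop is quadratic, which is acceptable for a sketch; at real log volumes you would sort both logs by time and sweep them together, or load them into a log-analysis platform.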

1:00 p.m. Team skips lunch to continue the investigation. Tempers flare. Everyone's cranky. Team opts for a late lunch break so everyone can focus, perform better, and avoid a strike.

2:00 p.m. After a welcome visit from the pizza delivery guy, team digs back in. It identifies a series of probing attempts against various Web applications and narrows the investigation down to a single SQL injection attack against the custom-built content management system. A flaw in the CMS code apparently has given the attacker full database access, including all hashed passwords. The team now recognizes that everything within the CMS has been queried and should be considered compromised. The CMS server is removed from the network, and the team disperses to change all the CMS passwords and patch the vulnerable section of code.
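The scenario doesn't show the CMS flaw itself, so what follows is a generic illustration of SQL injection, not a reconstruction of the fictional bug. It uses Python's built-in `sqlite3` module and a hypothetical `users` table: the vulnerable lookup concatenates user input into the SQL text, while the fixed lookup passes it as a bound parameter.

```python
import sqlite3


def make_demo_db():
    # Hypothetical table standing in for the CMS's user store.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, pw_hash TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", "hash-a"), ("bob", "hash-b")],
    )
    return conn


def lookup_vulnerable(conn, name):
    # BAD: the input is spliced into the SQL text, so a value such as
    # "x' OR '1'='1" rewrites the WHERE clause.
    sql = "SELECT name, pw_hash FROM users WHERE name = '" + name + "'"
    return conn.execute(sql).fetchall()


def lookup_safe(conn, name):
    # GOOD: the input is sent as a bound parameter and is never parsed as SQL.
    return conn.execute(
        "SELECT name, pw_hash FROM users WHERE name = ?", (name,)
    ).fetchall()
```

With the payload `x' OR '1'='1`, the vulnerable function's WHERE clause is always true and returns every row, password hashes included; the parameterized version treats the whole payload as a literal name and returns nothing.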

3:00 p.m. CMS is secured, and the team believes the compromise has been contained. But in reality, nobody is sure.

What They Did Wrong

This fictional scenario illustrates several major, and common, mistakes. Some of these are obvious--others, not so much.

Error: The NOC failed to respond to a performance alert because it was tied up by a separate, seemingly unrelated issue. This could have been a staffing problem--maybe there weren't enough people available to respond to both situations--or maybe the performance problem was simply forgotten. If the team had responded earlier and recognized that the performance issue was highly anomalous, the security investigation might have started sooner.

Lesson: All such anomalies should be investigated, diagnosed, and resolved--not just for security's sake, but for operational reasons. What if the performance problem was an early warning of some other operational issue that led to a large outage and lost revenue? Respond to and diagnose all events; if you're vigilant, potential security issues will surface faster, and you'll have a better opportunity to limit damage and investigate the breach.

Error: The sloppy response and troubleshooting carried over to the DBA, who should not have advised restarting Oracle and the server as the fix. He should have requested enough information to determine whether there had been many large queries, which is often a tip-off that something fishy's going on.

The DBA was not fully engaged in resolving the problem. Even after the issue persisted--presumably because the attacker came back to rerun failed queries--the dad of the year simply removed the server from the production pool and opted to wait until Monday to investigate. Unfortunately, at that point, the compromise had already occurred and continued to go unnoticed, giving the attacker more time to gather data (and do who knows what else, even beyond the initial server) while the DBA hung out at the mall.
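A rough version of the check the DBA could have asked for is sketched below: bucket the database's query log by hour and flag hours whose query count far exceeds the typical hour. The five-times-median threshold and the input format (a list of query timestamps) are assumptions for illustration; since the concern is specifically many large queries, a real check might also weigh rows returned per query.

```python
from collections import Counter
from datetime import datetime
from statistics import median
from typing import Iterable


def flag_query_spikes(
    query_times: Iterable[datetime], factor: float = 5.0
) -> list[datetime]:
    """Return the hours whose query count exceeds `factor` times the
    median hourly count, in chronological order.

    Hours with no queries are simply absent from the counts, which keeps
    the baseline on the high side (fewer false alarms).
    """
    per_hour = Counter(
        t.replace(minute=0, second=0, microsecond=0) for t in query_times
    )
    if not per_hour:
        return []
    baseline = median(per_hour.values())
    return sorted(hour for hour, n in per_hour.items() if n > factor * baseline)
```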

Lesson: Once the DBA determined the system had been compromised, he did the right thing by going straight to the security team. Unfortunately, many security pros react too quickly and attempt to place blame--in this case, they pointed fingers at the DBA for not responding more proactively over the weekend. While the DBA should have done more sooner, it's important to get past the blame game and focus on the root cause and real guilty party: the attacker. For security to loudly announce all the mistakes the DBA and NOC team made while working on the incident serves only to discourage and embarrass people, while distracting from the mission at hand.

"The first step is to stay calm," says Vincent Liu of security consultancy Stach and Liu. "Think through the situation, understand what is known, and identify your goals before you proceed. Some organizations will prioritize ensuring customer data is protected, while others will prioritize preventing purchase fraud. Once you understand your priorities related to the incident, you can determine the proper course of action and execute."

Error: After the initial incident report is received, the team must meet and inform the correct people. This is a tricky part of the process. Key executives need to know what's happening. At the end of the day, they're responsible for the company and the outcome. At the same time, you must properly communicate to executives their roles and what various teams need to do to complete the investigation. No one needed the distraction of briefing the COO every half hour.

Lesson: To succeed, include on the response team only those who need to be involved at a tactical level, and minimize distractions. This can be difficult if executives insist on being involved--the CIO or CISO should run interference so the people actually performing the investigation can focus.

Error: Working through the investigation while it's still fresh is important. But some things can't be skipped. As minor as it may seem, lunch is important, as is rest. If people are hungry or tired, they will become impatient and frustrated, and make mistakes.

Lesson: Incident response teams should work in shifts, avoid skipping meals, and sleep when they can during all-nighters. It's vital that the quality of work is never called into question; the evidence your people gather could end up in court.

Don't Pull That Plug Too Fast

Realize that mistakes will happen, and have best practices in place to minimize their impact. For example, a key tactic during an investigation is to disconnect or isolate compromised hosts from the network to minimize further damage. There are two schools of thought. One says, do this as fast as possible; the other says, leave the host in place for a while to analyze connection attempts and see what systems the attacker is trying to contact. "Organizations must minimize exposure," says Dean De Beer of malware investigative firm ThreatGrid. "While gathering data is beneficial, the organization must decide when to isolate known compromised hosts."

In our fictional scenario, the team disconnected compromised hosts as soon as it could. An alternative approach is to restrict network access to and from the host: any programs the attacker left behind will still try to initiate outbound connections, and those attempts can be logged, while the restrictions ensure data can't escape. Don't be too eager to shut down the system and grab an image, or to pull the network cable. Weigh the need for more intelligence against the possibility that system data will be destroyed or altered.

In addition, once a host has been identified as compromised, it can no longer be trusted. It should not simply be patched and placed back into production; you'll never know if you have truly regained control or if the attacker installed a hidden backdoor or malware. Since you can't know the full extent of the compromise, you should use a new system restored from a known-good state.

Even once you've identified the primary compromised host, don't be in a big hurry to wrap up the investigation. Take time to review all relevant logs to understand what systems the compromised host interacted with. Then review these systems for compromise too.
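The first pass of that review, finding which systems the compromised host talked to, can be sketched as a filter over connection logs. The `(timestamp, source, destination)` tuple format and the host names are hypothetical; in practice the data might come from firewall, flow, or host logs. Any peer that turns out to be compromised becomes the starting point for another pass.

```python
from datetime import datetime


def peers_since(conn_log, host: str, since: datetime) -> set:
    """Return hosts that exchanged traffic with `host` at or after `since`.

    conn_log: iterable of (timestamp, source_host, destination_host) tuples.
    """
    peers = set()
    for ts, src, dst in conn_log:
        if ts < since:
            continue  # ignore traffic from before the suspected compromise
        if src == host:
            peers.add(dst)
        elif dst == host:
            peers.add(src)
    return peers
```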

"Only in the smallest of incidents will the full extent of compromise be known," says Liu. "Organizations must identify the compromised areas, where they can be, based on information, time, and budget available."

At some point, you'll need to decide how long to keep the response team working, knowing it may not yet have found all the compromised systems. Eventually you'll enter a post-breach operations phase. At this point, implementing more logging and monitoring of potentially compromised systems, applications, and network segments will be valuable.

Plan for the Unplanned

Security incidents don't follow a script. Be prepared for many possible scenarios that can lead to detection of a breach, and have a plan in place for a thorough investigation. In many cases, following through on seemingly routine operational problems can detect security incidents early. Policies defining proper logging and alerting procedures, methods to provide insight to network events, and vigilant response by security and on-call staff are a must for all companies. The time to implement tools like data loss prevention or define a risk management framework is before you need them.

Cutting corners, or budget, in areas related to incident response is easy to do. But we've seen many companies pay dearly for these cuts when an attack happens and teams lack investigative insight and tools, and thus are unable to determine what occurred. Properly equipped, staffed, and trained incident response teams will resolve incidents faster and more thoroughly, and could save millions.

Accept that mistakes will be made, either before the investigation or during the process of finding the root cause of the incident, determining the severity, and stopping the compromise of systems and data. After the incident is concluded, do a postmortem. Use errors to improve your processes and identify new tactics that could have averted problems, because if there's one sure thing in security, it's that the next potential attack is on the horizon.

Adam Ely is director of security for TiVo. He previously led a software development group at Walt Disney Co., where he implemented secure coding standards and source code analysis processes. Write to us at [email protected].

Dark Reading April 11, 2011 Issue
