AI for Cybersecurity Shimmers With Promise, but Challenges Abound

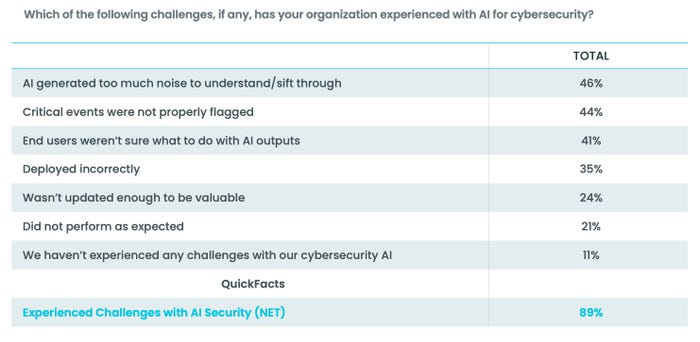

Companies see AI-powered cybersecurity tools and systems as the future, but at present nearly 90% of them say they face significant hurdles in making use of them.

May 4, 2022

Companies are quickly adopting cybersecurity products and systems that incorporate artificial intelligence (AI) and machine learning, but the technology comes with significant challenges, and it can't replace human analysts, experts say.

In a Wakefield Research survey published this week, for example, almost half of IT security professionals (46%) said their AI-based systems create too many false positives to handle, 44% complained that critical events are not properly flagged, and 41% do not know what to do with AI outputs. In total, 89% of companies reported challenges with cybersecurity solutions that claimed to have AI capabilities.

Source: Devo

Not all AI-based projects are created equal, because some technologies are more mature than others, says Gunter Ollmann, chief security officer at Devo, which sponsored the survey.

"When they talk about rolling out AI for cybersecurity ... those are the projects that are commonly failing," he says. "In particular, NLP [natural language processing] is being dangled as a technology that can do cool things for your security and as a way to manage your attack surface area, but it has not really been successful."

The Promised Benefits of AI for Cybersecurity

Incorporating machine-learning and AI features into cybersecurity products has been widely heralded as a way for companies to gain more visibility into attacks on their data and infrastructure, and to respond to those attacks more quickly. In December, for example, business consultancy Deloitte described the trend toward more AI in cybersecurity as a way of taming the complexity of today's business infrastructure, which includes more devices, more cloud services, and more employees working from home.

"Cyber AI can be a force multiplier that enables organizations not only to respond faster than attackers can move, but also to anticipate these moves and react to them in advance," the consultancy stated.

The most mature uses of machine learning in cybersecurity products are endpoint threat detection and user and entity behavior analytics (UEBA), which pinpoints risky users and compromised devices, says Allie Mellen, security and risk analyst for Forrester Research, a business intelligence firm.

AI Security Challenges Abound

While finding and classifying IT assets topped the list of uses of AI-based cybersecurity — with 79% of companies using the technology for that application — it also topped the list of challenges, with 53% of respondents considering the use case problematic, according to Wakefield Research's survey of 200 IT security professionals.

About a third of companies had trouble deploying the systems correctly, and a quarter criticized vendors for not updating their products often enough for them to remain valuable.

There are definite differences of opinion between business executives, who largely consider AI to be a perfect solution, and security analysts on the ground, who have to deal with the day-to-day reality, says Devo's Ollmann.

"In the trenches, the AI part is not fulfilling the expectations and the hopes of better triaging, and in the meantime, the AI that is being used to detect threats is working almost too well," he says. "We see the net volume of alerts and incidents that are making it into the SOC analysts' hands is continuing to increase, while the capacity to investigate and close those cases has remained static."

The continuing challenges that come with AI features mean that companies still do not trust the technology. A majority of respondents (57%) said their companies rely on AI features more or much more than they should, compared with only 14% who said they do not use AI enough, according to the survey.

In addition, few security teams have turned on automated response, partly because of this lack of trust, but also because automated response requires tighter integration between products that just is not there yet, says Ollmann.

Augmentation, Not Replacement

Another dimension of the AI challenge is a lack of clarity about its role vis-à-vis security staff. While AI applications can deliver real benefits, it's important to understand that they augment human analysts in the security operations center (SOC) rather than replace them, Forrester's Mellen stresses. That's a conclusion many companies likely do not want to hear, given the difficulty many have in hiring knowledgeable cybersecurity professionals.

"The most important resource that we have are the people that we have working today in the SOC," Mellen says. "AI helps, of course, for detection ... but we have to recognize that it is not going to be a complete game changer for security. The way that we use machine learning today is not even at the point where it would be able to take over most of the responsibilities of the human analyst."

Devo's Ollmann also cautioned that organizations need to have realistic expectations for what AI can and cannot do.

"I think there is the sci-fi view that AI will be a superhuman that operates at 1,000 times faster than the best human alive; leave that for the sci-fi books," he says. "Augmentation is about how do I make the human faster and more efficient in what they are doing, and also closing skills gaps that they have."

The challenges all suggest that trained human analysts, with expertise in machine learning and AI, are needed to make best use of AI-augmented cybersecurity products. And AI systems that can explain their conclusions will be necessary in the future to augment human analysts and retain trust, Ollmann says.
