As Artificial Intelligence Accelerates, Cybercrime Innovates

Rare government, industry alignment on AI threats means we have an opportunity to make rapid strides to improve cybersecurity and slip the hold cybercriminals have on us.

Cybercrime might be the world's fastest-growing entrepreneurial venture. Over the past decade, no enterprise, licit or otherwise, has flourished quite as ably. If cybercrime were a nation with its own gross domestic product (GDP), it would rank as the world's third-largest economy, behind only the United States and China.

This is no drill or caprice: According to new data from Verizon's "Data Breach Investigations Report," the average cost of a data breach is now $4.24 million, up from $3.86 million in 2021, with ransomware accounting for one out of every four breaches.

The growth of cybercrime was foreseeable. Criminals are known to be early adopters of new technologies, and there are rich targets available. Much has also been made of the prowess of cybercriminals and the maturity of their enterprises. But it's worth bearing in mind that, however clever they may seem, cybercriminals need to be just innovative enough to overcome defenses.

Generative AI Enters the Room

The acceleration of generative artificial intelligence (gen AI) is set to amplify present conditions. For example, we already know that cybercriminals are using AI to create malware and deepfakes, improve disinformation efforts and phishing emails, interfere in elections, and even discover new vulnerabilities to attack.

And for every positive use of novel technologies in cyberspace, those charged with defense are obliged to imagine and protect against negative possibilities.

Companies at the vanguard of AI — particularly those that develop large language models — have policies to guard against unethical use of their technologies. Guardrails are a necessity, but the universal truth remains that a lock only keeps out an honest person.

Amid all the hype and unease about generative AI, cybersecurity companies haven't been sitting still. Rapid developments are taking place to innovate with AI, helping mitigate risks and blunt cybercrime's ability to advance. AI shows promise in cleaning up old, vulnerable codebases, detecting and defeating scams, managing exposure continuously, and improving prevention.

Rare Alignment of Concern and Opportunity

Historically, industry has been vocal about government regulation that might interfere with innovation. As with any complex system, there's a dynamic at work that is now showing value in the interplay between regulation and innovation.

With cybersecurity and AI, what may be entirely novel is that today industry leaders in the United States and Europe have largely dispensed with negative views of regulation and are actually ahead of the curve, asking for regulation now.

This bears repeating: Thought leaders, politicians, regulators, and executives in North America, Europe, and around the world are now actively engaged in the prospects for artificial intelligence. Frameworks have been developed for risk management, and policies and laws are rapidly coming into view. In the United States, NIST has developed new guidance to "cultivate trust in AI technologies and promote AI innovation while mitigating risk." In Europe, ENISA has published proposed EU standards on cybersecurity, and the Artificial Intelligence Act looks likely to become law, with wide effects for all.

Source: NIST

No Longer Waiting for Change?

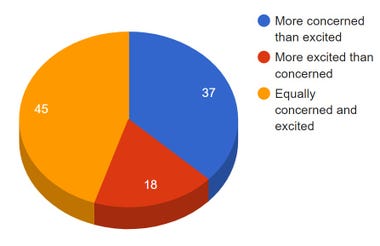

There's also an apparent shift in how people are coming to perceive technology risk. Last year, the Pew Research Center reported that Americans are decidedly worried about the proliferation of AI. The reasons for alarm may fall into customary categories of human vs. machine, fear of economic displacement, and job loss. But there are also profound concerns about AI and existential threats, many of which have been echoed by notable AI researchers and industry leaders.

Americans' perceptions of AI. Source: Data from Pew; drawn by the author

Taking a global perspective on perceptions of overall risk, the World Economic Forum's 2023 Global Risks Report places cybersecurity as the 8th biggest risk in terms of severity of impact, both over the next two years and the coming decade.

Whatever the reasons for concern, there appears to be a massive cultural shift that industry and government should act upon.

Shifts in Awareness and Risk Culture

Culture plays a vast (though largely unheralded) role in how we can combat cybercrime. Consider, for example, the traditional view of cybersecurity as something handled only by the IT department, an assumption that has reinforced divides between cyber policy and actual practice and helped cybercrime grow. The divide has been the source of many challenges, not least of which is aligning business and organizational priorities with risk management. After all, if IT was handling it, why should the rest of the organization be concerned? Then, when employees were trained to handle phishing and business email compromise, the training risked creating a blame culture instead of instituting solutions.

With new awareness of the risks and opportunities inherent in AI and cybersecurity, companies and governments need to seize this moment to further develop mutual trust. A mix of education and improvements in security culture is apt to have profound ripple effects. To improve security, we now have an opportunity to do better by augmenting human intelligence with machine-speed artificial intelligence.

The Urgency in Doing

With shades of the maxim uttered by Uncle Ben to Peter Parker, "With great power comes great responsibility," the Biden administration is radically shifting from the past decades' views by placing emphasis on those who can do the most to improve cybersecurity. AI will be critical to these efforts.

While uncertainty abounds, there's a rare alignment of interest and perceptions that points to an occasion to act. Industry's proposed six-month pause on AI developments may be just such an opportunity. While cybersecurity should now be in everything we do, the social contract is changing, and responsibility for cybersecurity is shifting to those who are best poised to do something. In the spirit of Leonardo da Vinci's "urgency in doing," we now have the possibility to make rapid strides to improve cybersecurity and slip the hold that cybercriminals have on us.
