Cybersecurity In-Depth: Getting answers to questions about IT security threats and best practices from trusted cybersecurity professionals and industry experts.

How Are Modern Fraud Groups Using GenAI and Deepfakes?

Fraud groups are using cutting-edge technology to create fake identities and run fraud campaigns at scale.

Question: How are modern fraud groups using generative artificial intelligence (GenAI) and deepfakes to steal millions of dollars?

Answer: If you imagine a fake identity document, what springs to mind? Is it a faded World War II-era identity card with a false name written in neat script next to a black-and-white photo? Is it a driver's license from a state you had never visited, with your photo alongside a fake name, address, and birthday, that you showed at bars in your college town so you could drink while underage? Sometimes those documents worked. Sometimes they didn't.

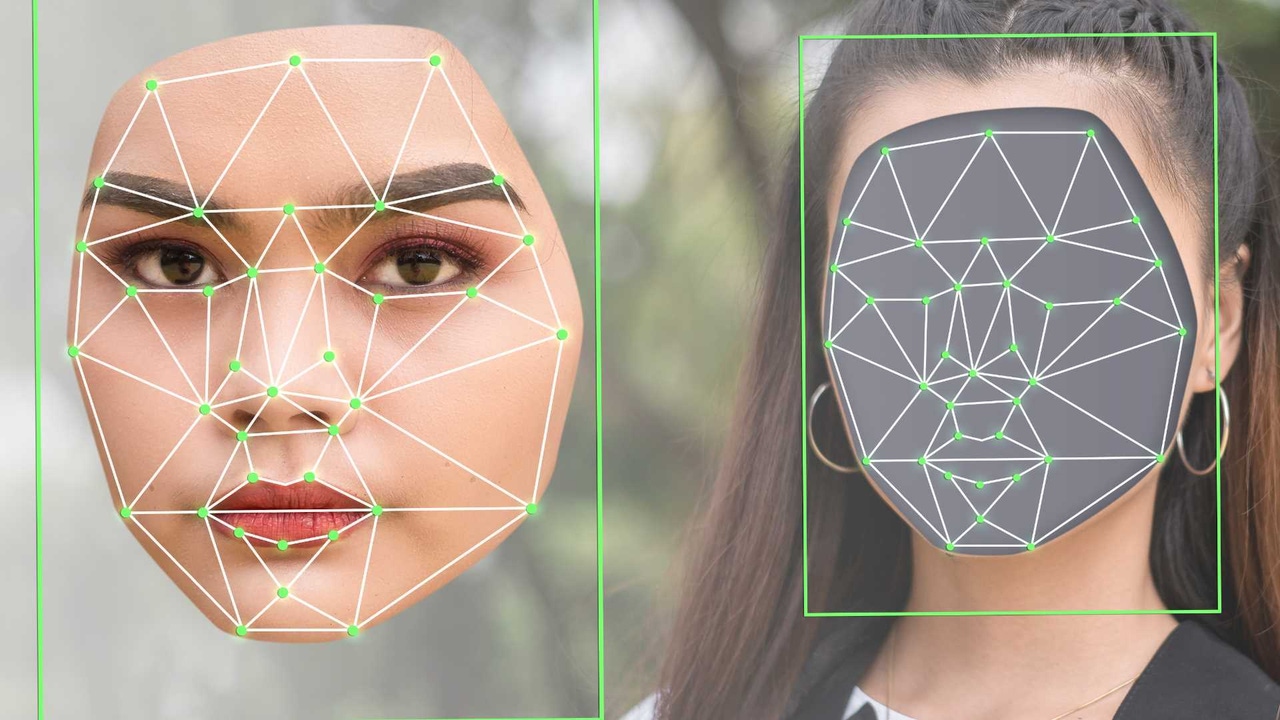

Modern fraud groups use cutting-edge, AI-driven deepfake technology to create fake identities, avatars, and documents that even a trained eye would struggle to spot, says Ofer Friedman, chief business development officer at AU10TIX, an identity verification and ID management automation company. These groups are using GenAI to steal personal data, craft convincing fake identities, and execute fraud schemes.

Fraudsters acquire personal data through a combination of methods, including phishing, social engineering, and hacking into corporate databases. They can also buy the information on cybercrime marketplaces. They can then use an AI system to randomize elements of that information, such as names, addresses, and document numbers, to generate new fake identities. Because AI is better than humans at avoiding repetitive patterns, these fake identities are more likely to evade detection systems and monitoring, Friedman says.

Traditional forgeries could be identified by spotting inconsistencies, such as mismatched shadows or areas with lower resolution than the rest of the image. There are still ways to identify deepfakes (such as counting the fingers on a person's hands), but each new model is getting better at producing uniform, high-quality content.

For fraudsters, not every payday needs to be huge, because they are operating in an "industrialized, systematic way," Friedman says. "You can go for $1 million or you can go 100 times for $10,000. It's the same effort because it's a machine doing it for you, unlike the good old days when you actually had to do it one by one."
