Open Source AI Models: Perfect Storm for Malicious Code, Vulnerabilities

Companies pursuing internal AI development using models from Hugging Face and other open source repositories need to focus on supply chain security and checking for vulnerabilities.

February 14, 2025

Attackers are finding more and more ways to post malicious projects to Hugging Face and other repositories for open source artificial intelligence (AI) models, while dodging the sites' security checks. The escalating problem underscores the need for companies pursuing internal AI projects to have robust mechanisms to detect security flaws and malicious code within their supply chains.

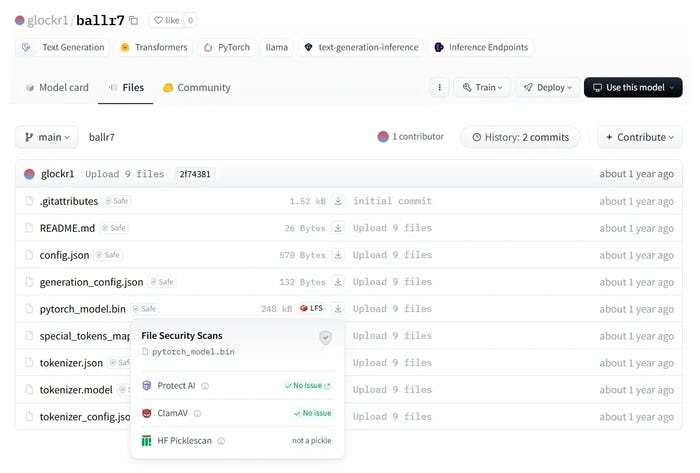

Hugging Face's automated checks, for example, recently failed to detect malicious code in two AI models hosted on the repository, according to a Feb. 3 analysis published by software supply chain security firm ReversingLabs. The threat actor used a common vector — data files using the Pickle format — with a new technique, dubbed "NullifAI," to evade detection.

While the attacks appeared to be proofs-of-concept, their success in being hosted with a "No issue" tag shows that companies should not rely on Hugging Face's and other repositories' safety checks for their own security, says Tomislav Pericin, chief software architect at ReversingLabs.

"You have this public repository where any developer or machine learning expert can host their own stuff, and obviously malicious actors abuse that," he says. "Depending on the ecosystem, the vector is going to be slightly different, but the idea is the same: Someone's going to host a malicious version of a thing and hope for you to inadvertently install it."

Companies are quickly adopting AI, and the majority are also establishing internal projects using open source AI models from repositories — such as Hugging Face, TensorFlow Hub, and PyTorch Hub. Overall, 61% of companies are using models from the open source ecosystem to create their own AI tools, according to a Morning Consult survey of 2,400 IT decision-makers sponsored by IBM.

Yet many of the components can contain executable code, leading to a variety of security risks, such as code execution, backdoors, prompt injections, and alignment issues. Alignment refers to how well an AI model matches the intent of its developers and users.

In an Insecure Pickle

One significant issue is that a commonly used data format, known as a Pickle file, is not secure and can be used to execute arbitrary code. Despite vocal warnings from security researchers, the Pickle format continues to be used by many data scientists, says Tom Bonner, vice president of research at HiddenLayer, an AI-focused detection and response firm.
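To illustrate why the format is considered unsafe, here is a minimal, deliberately harmless sketch using only Python's standard `pickle` module. The `Payload` class is hypothetical and exists only for this demonstration; a real attack would substitute a dangerous callable for the arithmetic expression.

```python
import pickle


class Payload:
    """Hypothetical object whose pickled form asks the loader to call eval()."""

    def __reduce__(self):
        # pickle stores "call this callable with these arguments" and
        # replays that call when the data is loaded.
        return (eval, ("6 * 7",))


blob = pickle.dumps(Payload())

# Loading the bytes runs eval("6 * 7") instead of rebuilding a Payload.
result = pickle.loads(blob)
print(type(result).__name__, result)
```

The point of the sketch is that simply loading the data is enough to trigger the call; the victim never has to invoke anything on the loaded object.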

"I really hoped that we'd make enough noise about it that Pickle would've gone by now, but it's not," he says. "I've seen organizations compromised through machine learning models — multiple [organizations] at this point. So yeah, whilst it's not an everyday occurrence such as ransomware or phishing campaigns, it does happen."

While Hugging Face has explicit checks for Pickle files, the malicious code discovered by ReversingLabs sidestepped those checks by using a different file compression for the data. Other research by application security firm Checkmarx found multiple ways to bypass scanners, such as the PickleScan tool used by Hugging Face, that are meant to detect dangerous Pickle files.
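As a rough idea of what static scanning of a Pickle file involves, the following sketch walks the opcode stream with the standard library's `pickletools` and lists the importable names a file references, without loading it. This is a simplified heuristic written for illustration, not PickleScan's actual implementation; the `referenced_globals` helper and `Payload` class are hypothetical names.

```python
import pickle
import pickletools


def referenced_globals(data: bytes):
    """List (module, name) pairs a pickle would import, without loading it."""
    found = []
    strings = []  # string arguments seen so far, used to resolve STACK_GLOBAL
    for opcode, arg, _pos in pickletools.genops(data):
        if opcode.name == "GLOBAL":
            # Older protocols carry "module name" as a single argument.
            module, name = arg.split(" ", 1)
            found.append((module, name))
        elif opcode.name == "STACK_GLOBAL" and len(strings) >= 2:
            # Protocol 4+ pushes module and name as two strings first.
            # Heuristic: assume they are the two most recent strings.
            found.append((strings[-2], strings[-1]))
        elif isinstance(arg, str):
            strings.append(arg)
    return found


class Payload:
    def __reduce__(self):
        return (eval, ("6 * 7",))


suspicious = referenced_globals(pickle.dumps(Payload(), protocol=4))
benign = referenced_globals(pickle.dumps({"a": [1, 2]}, protocol=4))
print(suspicious, benign)
```

A scanner of this kind sees only what it can parse, so a file packaged in an unexpected way may never reach the check at all.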

Despite having malicious features, this model passes security checks on Hugging Face. Source: ReversingLabs

"PickleScan uses a blocklist which was successfully bypassed using both built-in Python dependencies," Dor Tumarkin, director of application security research at Checkmarx, stated in the analysis. "It is plainly vulnerable, but by using third-party dependencies such as Pandas to bypass it, even if it were to consider all cases baked into Python, it would still be vulnerable with very popular imports in its scope."
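The weakness of a blocklist is that anything not listed is permitted. The opposite approach, an allowlist, can be sketched with the `find_class` hook that Python's `pickle.Unpickler` exposes. The set of permitted names below is an arbitrary assumption chosen for illustration, and `RestrictedUnpickler`, `restricted_loads`, and `Payload` are hypothetical names.

```python
import builtins
import io
import pickle

# Assumed allowlist for this sketch: only a few harmless builtins may be loaded.
SAFE_BUILTINS = {"range", "complex", "set", "frozenset", "slice"}


class RestrictedUnpickler(pickle.Unpickler):
    def find_class(self, module, name):
        # Called whenever the pickle asks to import a global.
        if module == "builtins" and name in SAFE_BUILTINS:
            return getattr(builtins, name)
        raise pickle.UnpicklingError(f"global '{module}.{name}' is forbidden")


def restricted_loads(data: bytes):
    return RestrictedUnpickler(io.BytesIO(data)).load()


class Payload:
    def __reduce__(self):
        return (eval, ("6 * 7",))


ok = restricted_loads(pickle.dumps([1, 2, range(3)]))

try:
    restricted_loads(pickle.dumps(Payload()))
    blocked = False
except pickle.UnpicklingError:
    blocked = True

print(ok, blocked)
```

With an allowlist, an unfamiliar import such as a third-party library function is rejected by default rather than waved through.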

Rather than Pickle files, data science and AI teams should move to Safetensors — a library for a new data format managed by Hugging Face, EleutherAI, and Stability AI — which has been audited for security. The Safetensors format is considered much safer than the Pickle format.
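The contrast with Pickle can be sketched in a few lines. The example assumes the publicly documented Safetensors layout: an 8-byte little-endian header length, a JSON header describing each tensor, then raw tensor bytes. It uses only the standard library rather than the `safetensors` package, and the `read_header` helper and toy file are hypothetical.

```python
import json
import struct


def read_header(blob: bytes) -> dict:
    """Parse only the JSON header; nothing in the file is executed."""
    (header_len,) = struct.unpack("<Q", blob[:8])
    if header_len > len(blob) - 8:
        raise ValueError("header length exceeds file size")
    return json.loads(blob[8 : 8 + header_len].decode("utf-8"))


# Hand-built toy file: one float32 tensor of shape [2], so 8 bytes of data.
header = {"weights": {"dtype": "F32", "shape": [2], "data_offsets": [0, 8]}}
header_bytes = json.dumps(header).encode("utf-8")
payload = struct.pack("<2f", 1.0, 2.0)
blob = struct.pack("<Q", len(header_bytes)) + header_bytes + payload

parsed = read_header(blob)
start, end = parsed["weights"]["data_offsets"]
data_region = blob[8 + len(header_bytes):]
values = struct.unpack("<2f", data_region[start:end])
print(parsed, values)
```

Because the file holds only a description plus raw numbers, a loader has no instruction stream to replay, which is the property that makes the format attractive as a Pickle replacement.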

Deep-Seated AI Vulnerabilities

Executable data files are not the only threats, however. Licensing is another issue: While pretrained AI models are frequently called "open source AI," they generally do not provide all the information needed to reproduce the AI model, such as code and training data. Instead, they provide the weights generated by the training and are covered by licenses that are not always open source compatible.

Creating commercial products or services from such models can potentially result in violating the licenses, says Andrew Stiefel, a senior product manager at Endor Labs.

"There's a lot of complexity in the licenses for models," he says. "You have the actual model binary itself, the weights, the training data, all of those could have different licenses, and you need to understand what that means for your business."

Model alignment, meaning how well a model's output aligns with the developers' and users' values, is the final wildcard. DeepSeek, for example, allows users to create malware and viruses, researchers found. Other models, such as OpenAI's o3-mini model, which boasts more stringent alignment, have already been jailbroken by researchers.

These problems are unique to AI systems, and the boundaries of how to test for such weaknesses remain a fertile field for researchers, says ReversingLabs' Pericin.

"There is already research about what kind of prompts would trigger the model to behave in an unpredictable way, divulge confidential information, or teach things that could be harmful," he says. "That's a whole other discipline of machine learning model safety that people are, in all honesty, mostly worried about today."

Companies should make sure to understand any licenses covering the AI models they are using. In addition, they should pay attention to common signals of software safety, including the source of the model, development activity around the model, its popularity, and the operational and security risks, Endor's Stiefel says.

"You kind of need to manage AI models like you would any other open source dependencies," Stiefel says. "They're built by people outside of your organization and you're bringing them in, and so that means you need to take that same holistic approach to looking at risks."
