
Regulators Combat Deepfakes With Anti-Fraud Rules

Despite the absence of laws specifically covering AI-based attacks, regulators can use existing rules around fraud and deceptive business practices.

As deepfakes generated by artificial intelligence (AI) become more sophisticated, regulators are turning to existing fraud and deceptive practice rules to combat their misuse. Although no federal law specifically addresses deepfakes, agencies such as the Federal Trade Commission (FTC) and the Securities and Exchange Commission (SEC) are applying existing rules in creative ways to mitigate these risks.

"We cannot believe our eyes anymore. What you see is not real," says Binghamton University professor Yu Chen.

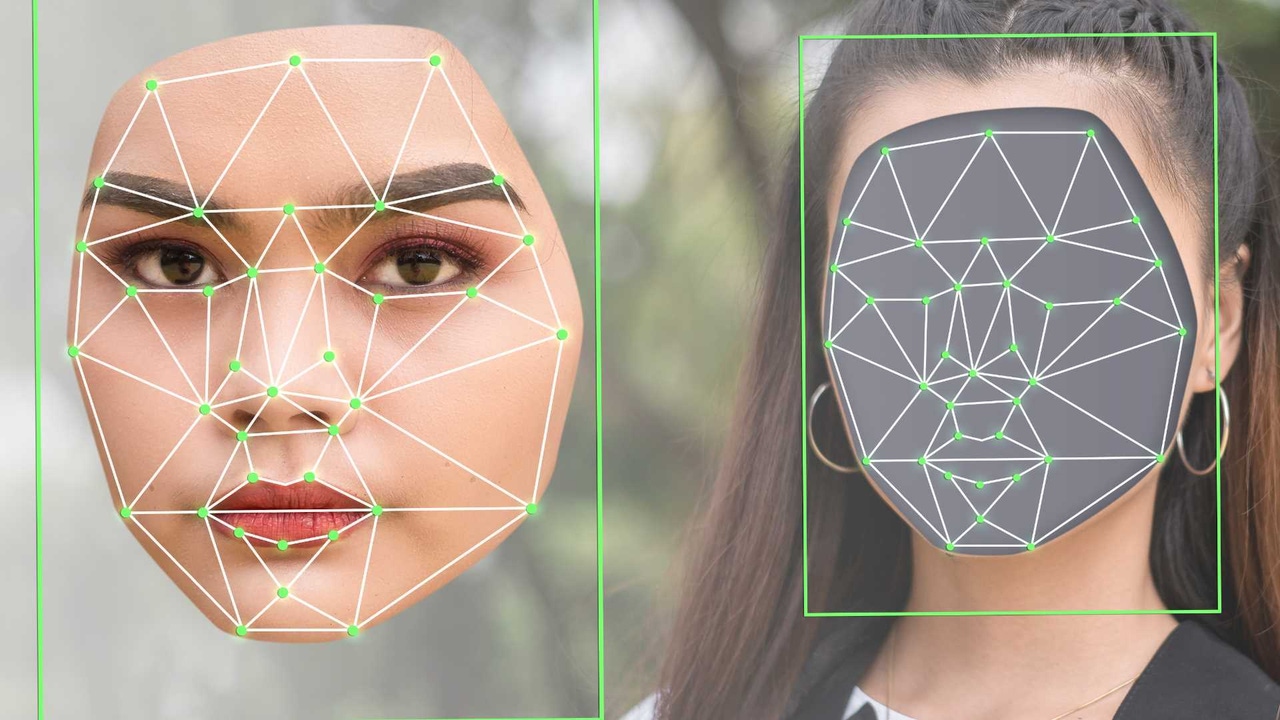

Tools to distinguish between an authentic image and a deepfake are being developed. But even if a user knows an image isn't real, challenges remain.

"Using AI tools to trick, mislead, or defraud people is illegal," said FTC chair Lina M. Khan in September.

AI tools used for fraud or deception are subject to existing laws, and Khan made it clear the FTC will be going after AI fraudsters.

Intent: Fraud and Deception

Deepfakes can also be used for other unfair corporate practices, such as fabricating an image or video of an executive announcing that the company is taking some action (perhaps going out of business or acquiring another company) that could move its stock price. If stock trading is involved, the SEC could bring an enforcement action.

When a deepfake is created with the intent to deceive, "that is a classic element of fraud," says Joanna Forster, a partner at law firm Crowell & Moring and former deputy attorney general, Corporate Fraud Section, for the State of California.

"We've all seen the past four years a very activist FTC on areas of antitrust and competition, on consumer protection, on privacy," Forster says.

In fact, an FTC official, speaking on background, says the agency is aggressively addressing the issue. In April, a rule on government or business impersonation went into effect. The agency also is continuing its efforts on voice clones designed to deceive and defraud victims. The agency has a business guidance blog that tracks many of these efforts.

Several state and local laws address deepfakes and privacy, but there is no federal legislation, nor are there clear rules defining which agency takes the lead on enforcement. In early October, US District Judge John A. Mendez granted a preliminary injunction blocking a California law against election-related deepfakes. Even though AI and deepfakes pose significant risks, Mendez said, California's law likely violated the First Amendment. Currently, 45 states plus the District of Columbia have laws prohibiting the use of deepfakes in elections.

Privacy and Accountability Challenges

Few laws protect people who are neither celebrities nor politicians from deepfakes that violate their privacy. Existing laws are largely written to protect a celebrity's trademarked face, voice, and mannerisms. That differs from a comedian impersonating a celebrity for entertainment's sake, where there is no intent to deceive the audience. If a deepfake does try to deceive its audience, however, it crosses the line into intent to deceive.

In the case of a deepfake of a non-celebrity, there is no way to sue without first knowing who created it, and that is not always possible to determine on the Internet, says Debbie Reynolds, privacy expert and CEO of Debbie Reynolds Consulting. Identity theft laws might apply in some cases, but Internet anonymity is difficult to overcome.

"You may never know who created this thing, but that harm still exists," Reynolds says.

While some states are considering laws that focus specifically on the use of AI and deepfakes, the tool used to commit the fraud or deception is not what matters, says Edward Lewis, CEO of CyXcel, a consulting firm specializing in cybersecurity law and risk management. Many corporate executives do not realize how easy deepfakes and other AI-generated content are to create and distribute.

"It's not so much about what do I need to know about deepfakes; it's rather who has access and how do we control that access in the workplace, because we wouldn't want our staff to be engaging for inappropriate reasons with any AI," Lewis says. "Secondly, what is our firm's policy on the use of AI? What context can or can't it be used for, and who actually do we grant access to AI so that they can carry out their jobs? It's much the same way as we have controls around other cybersecurity risks. The same controls need to be considered in the context of the use of AI."

As deepfakes grow more convincing, regulators are adapting by leveraging existing fraud and privacy laws. In the absence of federal legislation specific to deepfakes, agencies like the FTC and SEC are actively enforcing rules against deception, impersonation, and identity misuse. But challenges around accountability, privacy, and detection persist, leaving gaps that both individuals and organizations must navigate. Until regulatory frameworks catch up, proactive measures, such as AI governance policies and continuous monitoring, will be essential for mitigating risk and preserving trust.
